Greetings dear learner!

In this blog, we discuss deep learning concepts. Deep learning is a technique that loosely mimics the human thinking process in order to make decisions. Its basic models include the ANN, CNN, and RNN.

Reasons for deep learning:

- If the patterns in the data are complex, a deep neural network can be used.

- For simple data, we can use an SVM, regression, etc.

- For moderately complex data, i.e. when there are more inputs, we can use a neural network with a small number of layers.

- The number of nodes required in each layer depends on the data.

- If the data is complex, more layers and more nodes are needed.

For Free, Demo classes Call: 8605110150

Registration Link:Click Here!

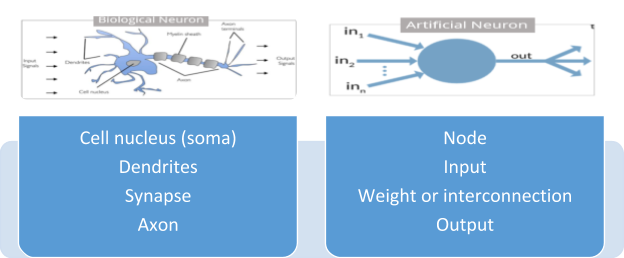

What is a Neuron?

- Artificial neural networks contain layers of neurons.

- A neuron is a computational unit that calculates a piece of information from weighted input parameters.

- Each input accepted by a neuron is weighted separately.

- To produce the output, the weighted inputs are added together and passed through a non-linear function.

- Each layer of neurons detects some additional information, such as the edges of objects in a picture or tumors in a human body.

- Using multiple layers of neurons, additional features can be detected from the input layer.

Node:

- In an artificial neural network, nodes are interconnected with each other, like the vast network of layers of neurons in a brain.

- Each node is represented by a circle. An arrow represents a connection from one neuron to a neuron in the next layer.

Perceptron:

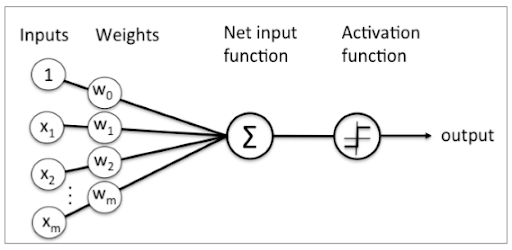

The perceptron is the most basic neural network unit (an artificial neuron). It performs certain computations to detect features in the input data.

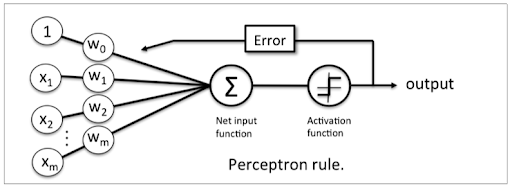

Learning Rule for Perceptron:

- The algorithm learns to assign optimal weight coefficients automatically.

- The input features are multiplied by their weights, and the result determines whether the neuron fires or not.

- A perceptron can have multiple input signals. The sum of all signals is compared with a threshold value to decide whether an output is generated; during training, a wrong output is sent back to adjust the weights.

- In the context of supervised learning and classification, the trained perceptron can then be used to predict the class of a sample.
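The learning rule above can be sketched in a few lines of Python. This is a minimal illustration, assuming NumPy, a firing threshold of zero, and a toy logical-AND dataset. The names `train_perceptron` and `predict`, the learning rate, and the data are all hypothetical choices made for this example.

```python
import numpy as np

def train_perceptron(X, y, lr=1.0, epochs=20):
    """Perceptron learning rule: weights change only when a prediction is wrong."""
    w = np.zeros(X.shape[1])   # one weight per input feature
    b = 0.0                    # bias plays the role of a (negative) threshold
    for _ in range(epochs):
        for xi, target in zip(X, y):
            fired = 1 if np.dot(w, xi) + b > 0 else 0   # does the neuron fire?
            error = target - fired                      # 0 when correct, +1 or -1 when wrong
            w += lr * error * xi                        # send the error back to the weights
            b += lr * error
    return w, b

def predict(X, w, b):
    """Predict class 1 where the weighted sum exceeds the threshold, else class 0."""
    return (X @ w + b > 0).astype(int)

# Toy supervised dataset (an assumption for illustration): logical AND.
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([0, 0, 0, 1])

w, b = train_perceptron(X, y)
print(predict(X, w, b))
```

Because the AND data is linearly separable, the weights stop changing after a few passes, and the trained perceptron can then classify each sample.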

Inputs of Perceptron:

- A perceptron accepts inputs, multiplies them by their weight values, and then applies a transformation function to the result. The output of the transformation function is the output of the perceptron.

- The figure above shows a perceptron. A perceptron produces a Boolean output.

- The Boolean output is based on inputs such as salaried, married, age, past credit profile, etc. It has only two values: Yes and No, or True and False.

- The summation function “∑” multiplies each input “x” by its weight “w” and then adds the products up as follows:

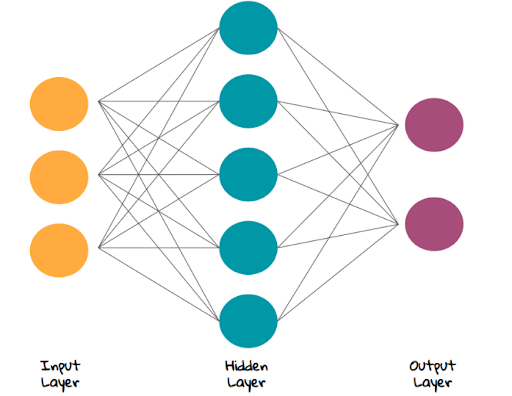
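As a rough sketch of the summation and Boolean output described above, the snippet below computes the weighted sum of the inputs and compares it with a threshold. The function name `perceptron_output`, the feature encoding, the weights, and the threshold are all made-up values for illustration and are not taken from the figure.

```python
import numpy as np

def perceptron_output(x, w, threshold=0.0):
    """Return True if the weighted sum of the inputs exceeds the threshold."""
    weighted_sum = np.dot(w, x)            # w1*x1 + w2*x2 + ... + wn*xn
    return bool(weighted_sum > threshold)  # Boolean output: True / False

# Hypothetical applicant: salaried=1, married=1, age (scaled)=0.5, good credit=1
x = np.array([1.0, 1.0, 0.5, 1.0])
# Hypothetical weights; in practice these are learned during training.
w = np.array([0.5, 0.25, 0.25, 1.0])

print(perceptron_output(x, w, threshold=1.0))
```

Here the weighted sum is 0.5 + 0.25 + 0.125 + 1.0 = 1.875, which is above the assumed threshold of 1.0, so the output is True.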

Artificial Neural Network (ANN):

Input layer: The first layer of a neural network is the input layer. Inputs are passed into this layer. Individual neurons receive the inputs, and each input is given a specific weight value. Once the inputs and weights have been combined by the summation function, an output is produced based on these values.

Hidden layer: A neural network is like any other network: it is an interconnected web of nodes, called neurons. Edges join the different neurons together. The function of a neuron is to receive a set of inputs, perform complicated calculations on them, and pass on an output that is used to solve the problem.

Output layer: After the data has passed through the input layer and multiple hidden layers, the result is reflected in the output layer.
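The flow from input layer to hidden layer to output layer can be sketched as a small forward pass. This is only an illustration: the layer sizes, the random weights, and the choice of a sigmoid as the non-linear function are assumptions, and `forward` is a hypothetical helper name.

```python
import numpy as np

def sigmoid(z):
    """Non-linear function that squashes any value into the range (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def forward(x, layers):
    """Pass the input through each (weights, bias) pair in turn."""
    activation = x
    for W, b in layers:
        # Weighted sum of the previous layer's outputs, plus bias, then non-linearity.
        activation = sigmoid(W @ activation + b)
    return activation

rng = np.random.default_rng(0)  # fixed seed so the sketch is repeatable
layers = [
    (rng.normal(size=(4, 3)), rng.normal(size=4)),  # hidden layer: 3 inputs -> 4 neurons
    (rng.normal(size=(1, 4)), rng.normal(size=1)),  # output layer: 4 neurons -> 1 output
]

x = np.array([0.2, 0.7, 0.1])   # values fed into the input layer
output = forward(x, layers)
print(output.shape)
```

With untrained random weights the output value itself is meaningless; the point is only how the data moves from layer to layer.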

What are weights and biases?

- Each node uses the same kind of classifier, and the nodes are activated in a particular sequence.

- If every node in a hidden layer receives the same input, the question is how their outputs differ.

- The reason each output is different is the weights and the bias.

- Each edge has a unique weight and each node has a unique bias.

- Hence the combination used for each activation is also unique.

- The prediction accuracy of the model depends on the weights and biases.

- The process of improving accuracy is called training, as with any machine learning model.

- Cost = generated output – actual output

- The purpose of training is to reduce the cost.

- If training is done properly, a neural network has the potential to make accurate predictions.
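To make the idea of reducing the cost concrete, here is a minimal sketch that trains a single linear neuron with gradient descent. Note the assumptions: the article defines the cost as generated output minus actual output, while this sketch squares that difference and averages it (mean squared error) so that positive and negative errors do not cancel. The data, learning rate, and the name `train_neuron` are hypothetical.

```python
import numpy as np

def train_neuron(X, y, lr=0.05, steps=1000):
    """Adjust one neuron's weights and bias to shrink the squared cost."""
    w = np.zeros(X.shape[1])
    b = 0.0
    history = []
    for _ in range(steps):
        generated = X @ w + b                        # generated output
        error = generated - y                        # generated output - actual output
        history.append(float(np.mean(error ** 2)))   # assumed cost: mean squared error
        # Move the weights and bias a small step against the gradient of the cost.
        w -= lr * 2.0 * (X.T @ error) / len(y)
        b -= lr * 2.0 * float(np.mean(error))
    return w, b, history

# Toy data (an assumption): actual output follows y = 2x + 1.
X = np.array([[0.0], [1.0], [2.0], [3.0]])
y = np.array([1.0, 3.0, 5.0, 7.0])

w, b, history = train_neuron(X, y)
print(w, b, history[0], history[-1])
```

The cost in `history` falls as training proceeds, and the learned weight and bias approach the values 2 and 1 that generated the data.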

Convolutional Neural Network (CNN):

In 1995, Yann LeCun, professor of computer science at New York University, introduced the concept of convolutional neural networks. Convolutional neural networks are mostly used for classification problems. But why do we need a convolutional network if we already have artificial neural networks? The answer is feature extraction.

What are features?

- Features are color, shape, orientation, object edges, pixel location, etc.

- The more features we extract, the more accurately classification can be done.
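As a small illustration of feature extraction, the sketch below slides a 3 × 3 kernel over a tiny image to highlight a vertical edge. This is an assumption-laden example: the image and the kernel are hand-picked, whereas a real CNN learns its kernels during training, and `convolve2d` is a hypothetical helper written here rather than a library call.

```python
import numpy as np

def convolve2d(image, kernel):
    """Slide the kernel over the image (no padding) and sum the element-wise products."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# 5x5 image: dark (0) on the left, bright (1) on the right, so there is one vertical edge.
image = np.array([[0, 0, 0, 1, 1]] * 5, dtype=float)

# Hand-picked vertical-edge kernel: responds where brightness increases left to right.
kernel = np.array([[-1, 0, 1]] * 3, dtype=float)

feature_map = convolve2d(image, kernel)
print(feature_map)
```

The feature map is 0 where the image is uniformly dark and 3 wherever the window covers the dark-to-bright boundary, so the edge has been extracted as a feature.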

Recurrent Neural Network (RNN):

The basic functionality of an RNN is forward propagation over time.

Why use an RNN:

Problems that we can solve using an RNN include the following:

NLP: Natural language processing includes techniques like bag of words, TF-IDF, and Word2Vec. What exactly do these techniques do? They convert basic text data into vectors.

Sequence data:

- N is the number of samples.

- D is the number of features.

- T is the sequence length, i.e. the number of time steps in the sequence.

The data is therefore represented in a 3D format: N × T × D.

What are N, T and D for GPS data?

- N is the number of trips; one sample is a single trip to work.

- D = 2, i.e. latitude and longitude.

- T is the time taken from one location to the other in seconds; if the trip takes 30 min, then T is 1800.
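The N × T × D layout and the idea of forward propagation over time can be sketched as follows. Everything numeric here is an assumption for illustration: the GPS values are random placeholders, there is one reading per second, the hidden size is 4, and `rnn_forward` is a hypothetical minimal recurrent step rather than a full RNN implementation.

```python
import numpy as np

N, T, D = 3, 1800, 2     # 3 trips, 1800 one-second time steps, (latitude, longitude)
rng = np.random.default_rng(0)
gps = rng.normal(size=(N, T, D))   # placeholder numbers, not real GPS data

def rnn_forward(x, Wx, Wh, b):
    """Carry a hidden state forward through time: h_t = tanh(x_t Wx + h_(t-1) Wh + b)."""
    n_samples, n_steps, _ = x.shape
    h = np.zeros((n_samples, Wh.shape[0]))    # initial hidden state for every sample
    for t in range(n_steps):
        # Each step combines the current input with the previous hidden state.
        h = np.tanh(x[:, t, :] @ Wx + h @ Wh + b)
    return h

H = 4                                          # assumed hidden state size
Wx = rng.normal(scale=0.1, size=(D, H))        # input-to-hidden weights
Wh = rng.normal(scale=0.1, size=(H, H))        # hidden-to-hidden weights
b = np.zeros(H)

final_state = rnn_forward(gps, Wx, Wh, b)
print(gps.shape, final_state.shape)
```

The input has shape (3, 1800, 2), and after stepping through all 1800 time steps each trip is summarised by one hidden state vector of length 4.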

How can we define the axes for stock price data?

The vertical axis is price and the horizontal axis is time. Here we have a time window of length T, with T = 10, used to predict the next value. N is the number of windows in the time series. If we have a sequence of 100 stock prices, how many windows of length 10 can be taken from it? 100 − 10 + 1 = 91.

In general, if L is the length of the sequence and the window size is T, then there are L − T + 1 windows.

We are usually not interested in only a single stock. Suppose we have 500 different stocks: D = 500, T = 10.

Then the size of each sample is D × T = 500 × 10 = 5000.
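The window arithmetic above can be checked with a short sketch. The price values are placeholders and `make_windows` is a hypothetical helper name; only the shapes matter here.

```python
import numpy as np

def make_windows(series, T):
    """Return every window of length T from a series of shape (L,) or (L, D)."""
    L = len(series)
    return np.stack([series[i:i + T] for i in range(L - T + 1)])

# Single stock: a placeholder sequence of L = 100 prices.
prices = np.arange(100, dtype=float)
windows = make_windows(prices, T=10)
print(windows.shape)          # N windows, each of length T

# 500 stocks observed over the same 100 time steps (placeholder zeros).
many_stocks = np.zeros((100, 500))
multi_windows = make_windows(many_stocks, T=10)
print(multi_windows.shape)    # N x T x D
print(multi_windows[0].size)  # values in one sample: T * D
```

For the single stock this gives 91 windows of length 10, and for 500 stocks each of the 91 samples holds 10 × 500 = 5000 values.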

Details of RNNs and CNNs will be discussed in upcoming blogs.

Author-

Amrita Helwade | SevenMentor Pvt Ltd.

© Copyright 2019 | Sevenmentor Pvt Ltd.