Gradient Boosting (XGBoost) Tree

Introduction to Gradient Boosting

- Gradient Boosted Trees are one of the most commonly used boosting algorithms. Gradient Boosting is a supervised learning algorithm.

- Several boosting algorithms exist. Here, we consider only the Gradient Boosting algorithm.

- Boosting combines a collection of weak learners to form a stronger learner.

- Gradient Boosting can be applied to both regression and classification problems.
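
To make the regression and classification use cases concrete, here is a minimal sketch. It assumes scikit-learn is installed and uses its `GradientBoostingRegressor` and `GradientBoostingClassifier` rather than the XGBoost library named in the title. The synthetic datasets and the hyperparameter values are illustrative assumptions, not recommendations.

```python
from sklearn.datasets import make_classification, make_regression
from sklearn.ensemble import GradientBoostingClassifier, GradientBoostingRegressor
from sklearn.model_selection import train_test_split

# Regression: predict a continuous target from synthetic data (assumed for illustration).
X_reg, y_reg = make_regression(n_samples=500, n_features=5, noise=10.0, random_state=0)
Xr_train, Xr_test, yr_train, yr_test = train_test_split(X_reg, y_reg, random_state=0)

regressor = GradientBoostingRegressor(
    n_estimators=100,   # number of boosting iterations (weak learners)
    learning_rate=0.1,  # how much each weak learner contributes
    max_depth=3,        # shallow trees keep each learner weak
    random_state=0,
)
regressor.fit(Xr_train, yr_train)
r2 = regressor.score(Xr_test, yr_test)  # R^2 on held-out data

# Classification: predict a binary label from synthetic data (assumed for illustration).
X_clf, y_clf = make_classification(n_samples=500, n_features=10, random_state=0)
Xc_train, Xc_test, yc_train, yc_test = train_test_split(X_clf, y_clf, random_state=0)

classifier = GradientBoostingClassifier(
    n_estimators=100, learning_rate=0.1, max_depth=3, random_state=0
)
classifier.fit(Xc_train, yc_train)
accuracy = classifier.score(Xc_test, yc_test)  # fraction of correct predictions

print(f"Regression R^2: {r2:.3f}")
print(f"Classification accuracy: {accuracy:.3f}")
```

Both estimators share the same three main knobs: the number of iterations, the learning rate, and the depth of each tree.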

For Free, Demo classes Call: 7507414653

Registration Link: Click Here!

- Gradient Boosting is a type of Ensemble Learning.

- Ensemble Learning is the process by which multiple models, such as classifiers or experts, are generated and combined to solve a particular problem.

- Ensemble Learning is primarily used to improve the performance of a model on tasks such as classification, prediction, and function approximation.

- The Gradient Boosting algorithm is characterized by two key features:

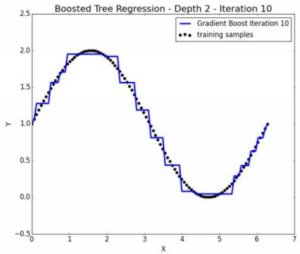

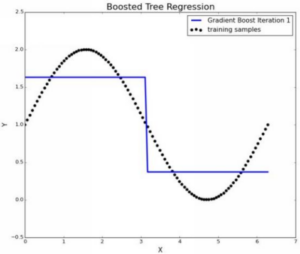

- Multiple Iterations

- Each subsequent iteration focuses on the parts of the problem that the previous iterations got wrong.
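
The two features above can be sketched from scratch in plain Python. This is a simplified illustration, not the XGBoost implementation: it assumes squared-error loss, a single input feature, and one-split decision stumps as the weak learners. The function names (`fit_stump`, `gradient_boost`, `predict`) and the toy data are hypothetical.

```python
def fit_stump(xs, residuals):
    """Fit a one-split stump: pick the threshold that minimizes squared error."""
    best = None
    values = sorted(set(xs))
    for low, high in zip(values, values[1:]):
        threshold = (low + high) / 2
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        sse = sum((r - left_mean) ** 2 for r in left)
        sse += sum((r - right_mean) ** 2 for r in right)
        if best is None or sse < best[0]:
            best = (sse, threshold, left_mean, right_mean)
    return best[1:]  # (threshold, left_mean, right_mean)


def predict_stump(stump, x):
    threshold, left_mean, right_mean = stump
    return left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_iterations=50, learning_rate=0.1):
    """Multiple iterations, each fitting what the previous ones got wrong."""
    base = sum(ys) / len(ys)          # iteration 0: predict the mean
    predictions = [base] * len(ys)
    stumps, errors = [], []
    for _ in range(n_iterations):
        # Residuals are the current mistakes (the negative gradient of squared error).
        residuals = [y - p for y, p in zip(ys, predictions)]
        stump = fit_stump(xs, residuals)
        stumps.append(stump)
        # Take a small step toward correcting those mistakes.
        predictions = [
            p + learning_rate * predict_stump(stump, x)
            for x, p in zip(xs, predictions)
        ]
        errors.append(sum((y - p) ** 2 for y, p in zip(ys, predictions)) / len(ys))
    return (base, learning_rate, stumps), errors


def predict(model, x):
    base, learning_rate, stumps = model
    return base + sum(learning_rate * predict_stump(s, x) for s in stumps)


# Toy data (assumed for illustration): y = x^2 on 20 points.
xs = [float(i) for i in range(20)]
ys = [x ** 2 for x in xs]
model, errors = gradient_boost(xs, ys)
print(f"Training MSE after first iteration: {errors[0]:.2f}")
print(f"Training MSE after last iteration:  {errors[-1]:.2f}")
```

Each stump on its own is a poor model, but because every new stump is fitted to the residuals left by the ones before it, the training error shrinks as iterations accumulate.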

Let’s understand the algorithm by taking a very simple example.

- Suppose you are a Data Science teacher teaching a class of 20 students from different educational backgrounds.

- On day 1, you know nothing about the level of those 20 students, so you start with a standard, basic class (for example, basic statistics).

- On day 2 and subsequent days, you have some idea of how well each student is doing, so you focus mostly on the students who are weakest in Data Science. Here, your goal is not to make the best students perfect but to bring the weak students up to average.

- So, as a good teacher, you first concentrate on the weak students and try to bring them up to average.

- Now let’s relate this example to Boosting.

- Simply put, the iterations correspond to the subsequent days: each new class is one iteration.

- On each subsequent day, you find the weak students (the errors) and improve them.

- Another way to interpret this is that a group of daily lessons (the weak learners) can be combined to produce a good quality course (the strong learner).
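
The analogy can be written compactly as an additive model. The notation below is an assumption introduced here, not taken from the article: $F_m$ is the combined model after $m$ iterations, $h_m$ is the weak learner fitted at iteration $m$, $L$ is the loss function, and $\nu$ is the learning rate. This is a simplified form; full implementations usually also optimize a step size per iteration or per tree leaf.

$$
F_0(x) = \arg\min_{c} \sum_{i=1}^{n} L(y_i, c)
$$

$$
r_i^{(m)} = -\left[ \frac{\partial L\big(y_i, F(x_i)\big)}{\partial F(x_i)} \right]_{F = F_{m-1}}, \qquad i = 1, \dots, n
$$

$$
F_m(x) = F_{m-1}(x) + \nu \, h_m(x), \qquad m = 1, \dots, M
$$

Here $h_m$ is fitted to the pseudo-residuals $r_i^{(m)}$. For squared-error loss $L(y, F) = \tfrac{1}{2}(y - F)^2$, the pseudo-residual reduces to $r_i^{(m)} = y_i - F_{m-1}(x_i)$, which is exactly the "weak students" of the previous day: the points the current model still gets wrong.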

Looking to boost your career in the exciting field of machine learning? Then look no further than SevenMentor’s comprehensive Machine Learning Training in Pune!

Author:

| SevenMentor Pvt Ltd.

© Copyright 2021 | Sevenmentor Pvt Ltd.